Gravity Forms OpenAI

Use Gravity Forms submission data to interact with OpenAI – the leading provider of cutting-edge AI language models. Supports the gpt-5 and gpt-4o model families, as well as gpt-4-turbo.

March 11, 2024: Removed the completions and edits endpoints, which OpenAI has deprecated. Feeds using these endpoints will be automatically converted to the chat/completions endpoint.

Hold up! There’s a better way.

We’ve evolved this little plugin into a premium add-on with AI superpowers. It’s called Gravity Connect OpenAI. You can use it to generate text, images, and audio using Gravity Forms fields and data. Explore the enhanced plugin.

Overview

Gravity Forms OpenAI is a free plugin that integrates Gravity Forms with OpenAI – the leading provider of cutting-edge AI language models.

This plugin allows you to send prompts constructed from your form data to OpenAI and capture its responses alongside the submission. You can also utilize the power of AI to edit submitted data for grammar, spelling, word substitutions, and even full rewrites for readability or tone. Lastly, GF OpenAI allows you to moderate submissions and flag, block, or spam undesirable content.

- Overview

- Getting Started

- Workshop Crash Course

- Using the Plugin

- Merge Tag Modifiers

- Integrations

- Known Limitations

- FAQs

Getting Started

Install plugin

GF OpenAI is available for free through Spellbook.

- Download and install Spellbook.

- Open Spellbook and search for “GF OpenAI”.

- Click Install on the GF OpenAI card — you’ll get free automatic updates and the latest features.

Need help? Check out our guide to installing your first plugin with Spellbook.

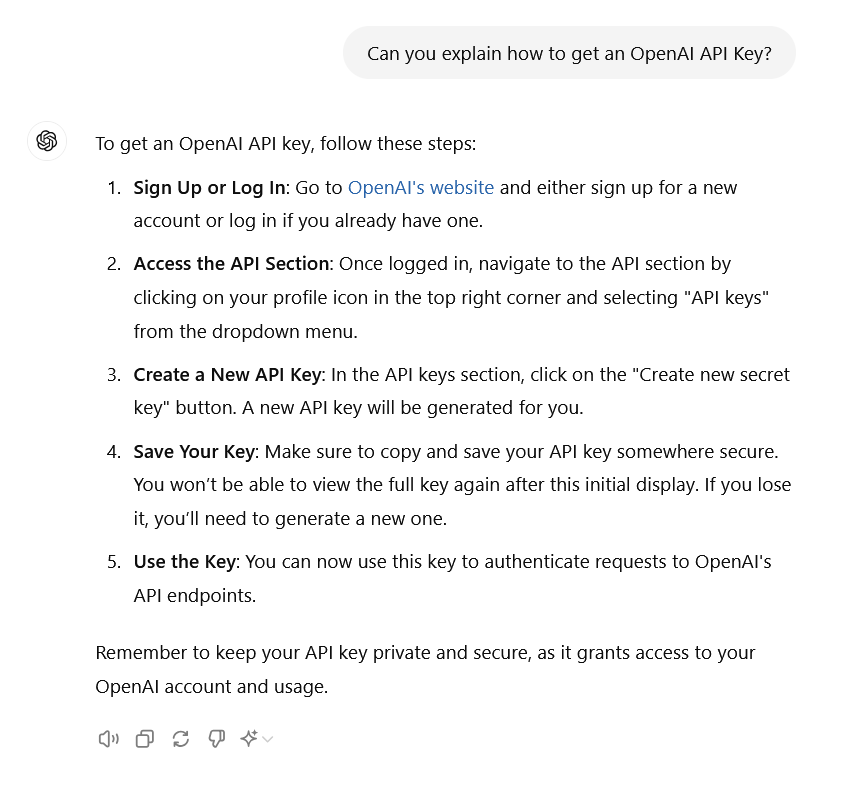

Get an OpenAI API key

Assuming you’re already using Gravity Forms, there’s only one thing you’ll need to get started with GF OpenAI: an OpenAI API key!

We’ll let ChatGPT (powered by OpenAI) explain how to get your own. 😉

Here’s a shortcut if you already have an OpenAI account.

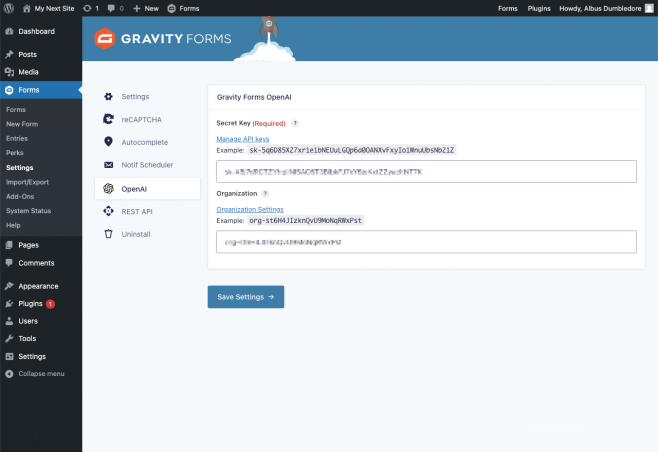

Once you have your API key, copy it and paste it into the GF OpenAI plugin settings by navigating to Forms › Settings › OpenAI.

Workshop Crash Course

Before we dig into the instructions for using this plugin, you may find our crash course workshop a useful starting point. Watch as we explore some popular use cases, fine-tune models, look at how a marketing agency can use OpenAI with Gravity Forms, and answer questions from the audience!


Using the Plugin

Our plugin works with Gravity Forms in a few different ways to make it easy to use OpenAI’s powerful AI capabilities with your forms.

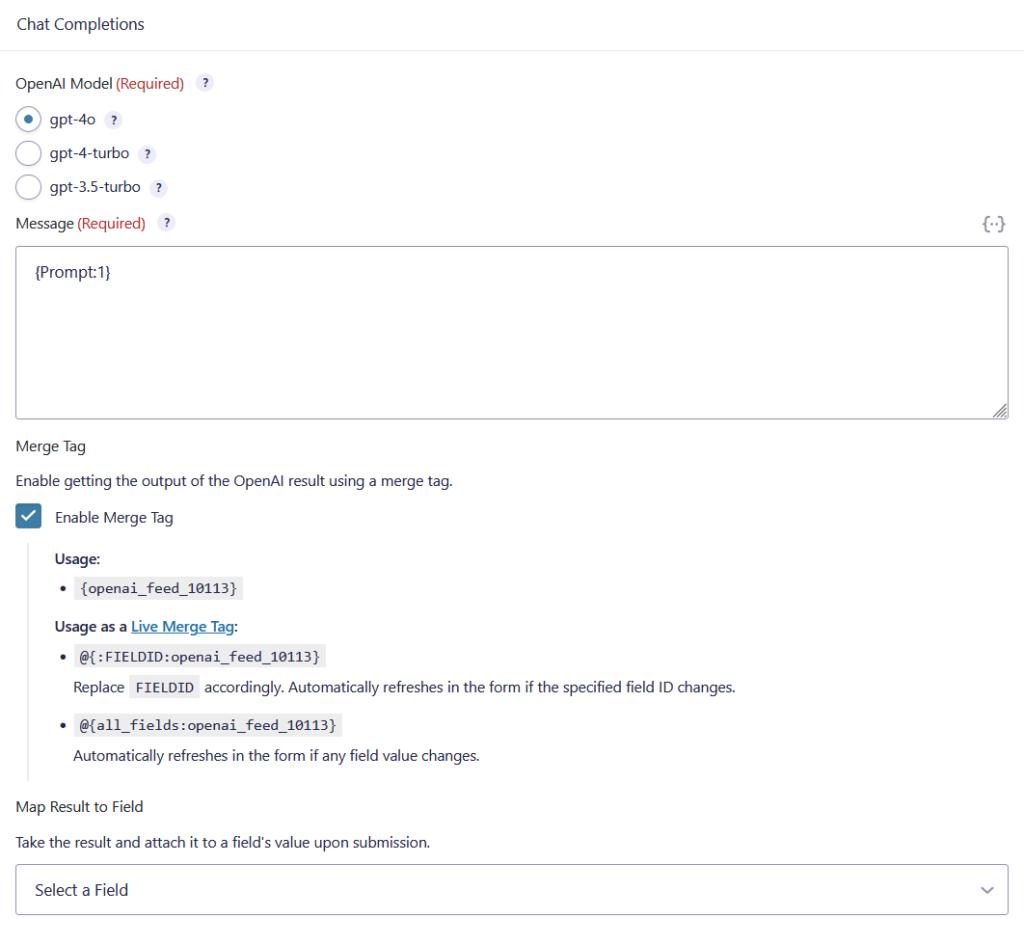

Chat Completions

Chat Completions is one of OpenAI’s primary endpoints, known for being both efficient and versatile. It can handle and respond accurately to a wide variety of prompt types, and it’s powered by the same models that drive ChatGPT, making it a reliable choice for diverse AI-generated responses.

Some great use cases for Chat Completions include: debugging code, generating documentation, and answering user questions!

OpenAI Model – Chat Completions has access to gpt-5, gpt-5-mini, gpt-5-nano, gpt-4o, gpt-4o-mini, gpt-4-turbo, and gpt-3.5-turbo. Of these, gpt-5-nano is the fastest and most cost-effective option.

Prompt – Any combination of form data (represented as merge tags) and static text that will be sent to the selected OpenAI model and to which the model will respond.

Merge Tag – Enable merge tags to output the result of an OpenAI feed in form confirmations, notifications, or even live in your form fields (via Populate Anything’s Live Merge Tags).

Map Results to Field – All OpenAI responses will be captured as an entry note. Use this setting to optionally map the response to a form field.

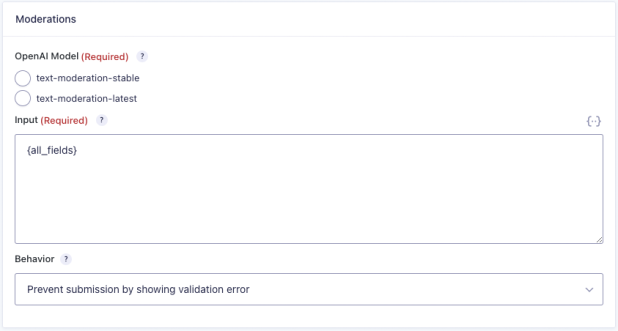
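To make these settings concrete, here is a minimal Python sketch of what a Chat Completions feed does conceptually: replace Gravity Forms-style merge tags such as `{Topic:1}` in the prompt with entry values, build the JSON body for OpenAI’s `/v1/chat/completions` endpoint, and read the reply out of the response. The helper names (`render_prompt`, `build_chat_request`, `extract_reply`) and the simplified merge tag handling are assumptions for illustration, not the plugin’s actual code, and nothing is sent over the network.

```python
import json
import re

# Matches simple field merge tags like {Topic:1} or {:1}. This is a hypothetical
# simplification: real Gravity Forms merge tags also support modifiers, input IDs, etc.
MERGE_TAG = re.compile(r"\{[^:{}]*:(\d+)\}")


def render_prompt(template: str, entry: dict) -> str:
    """Replace each {Label:ID} merge tag with the entry value for that field ID."""
    return MERGE_TAG.sub(lambda m: entry.get(m.group(1), ""), template)


def build_chat_request(model: str, prompt: str) -> str:
    """Build the JSON body for POST https://api.openai.com/v1/chat/completions."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return json.dumps(body)


def extract_reply(response_json: str) -> str:
    """Pull the assistant's text out of a Chat Completions response."""
    data = json.loads(response_json)
    return data["choices"][0]["message"]["content"]


# Usage: entry values keyed by field ID (as strings).
entry = {"1": "solar panels", "2": "homeowners"}
prompt = render_prompt("Write a short intro about {Topic:1} for {Audience:2}.", entry)
request_body = build_chat_request("gpt-4o-mini", prompt)
```

In the plugin, the reply is captured as an entry note and, if Map Results to Field is set, saved to the mapped field; the sketch stops at extracting the text.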

Moderations

Moderations allow you to check whether submitted data complies with OpenAI’s content policy. OpenAI flags inappropriate or harmful content, and our plugin lets you decide what to do with that submission: block it with a validation error, mark the entry as spam, or record OpenAI’s response and do nothing else.

OpenAI Model – Select the model that best fits your needs. If you want the latest AI technology, use text-moderation-latest. Otherwise, stick with text-moderation-stable.

Input – Any combination of form data (represented as merge tags) and static text that will be sent to the selected OpenAI model for evaluation.

Behavior – Decide what to do if the submitted input fails validation. You can:

- Prevent submission by showing validation error

The submission will be blocked and a validation error will be displayed. Due to the nature of how data is sent to OpenAI, specific fields cannot be highlighted as having failed validation.

- Mark entry as spam

The submission will be allowed but the entry will be marked as spam. Gravity Forms does not process notifications or feeds for spammed entries.

- Do nothing

The submission will be allowed and the failed validation will be logged as a note on the entry.
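The three behaviors can be sketched in Python as a function that takes a response from OpenAI’s `/v1/moderations` endpoint (whose `results[0].flagged` and `categories` fields report the verdict) and decides what happens to the submission. The `Behavior` and `Outcome` names are hypothetical, and keeping a note on spammed entries is an assumption; the plugin’s real implementation is not shown here.

```python
import json
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Behavior(Enum):
    """The three Behavior options above (enum names are hypothetical)."""
    BLOCK = "block"      # prevent submission with a validation error
    SPAM = "spam"        # save the entry but mark it as spam
    NOTHING = "nothing"  # save the entry and log the result as a note


@dataclass
class Outcome:
    valid: bool          # False means the submission is blocked
    spam: bool           # True means the entry is saved as spam
    note: Optional[str]  # text logged on the saved entry, if any


def moderation_request(text: str, model: str = "text-moderation-stable") -> str:
    """JSON body for POST https://api.openai.com/v1/moderations."""
    return json.dumps({"input": text, "model": model})


def apply_moderation(response_json: str, behavior: Behavior) -> Outcome:
    """Map a Moderations response onto the configured behavior."""
    result = json.loads(response_json)["results"][0]
    if not result["flagged"]:
        return Outcome(valid=True, spam=False, note=None)
    hits = sorted(name for name, hit in result["categories"].items() if hit)
    note = "Flagged by OpenAI moderation: " + ", ".join(hits)
    if behavior is Behavior.BLOCK:
        # No entry is saved, so there is nowhere to attach a note. The error is
        # form-wide because individual fields can't be highlighted.
        return Outcome(valid=False, spam=False, note=None)
    if behavior is Behavior.SPAM:
        # Assumption: the note is kept on spammed entries too.
        return Outcome(valid=True, spam=True, note=note)
    return Outcome(valid=True, spam=False, note=note)
```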

Deprecated Endpoints

The completions and edits endpoints have been deprecated by OpenAI and were removed from Gravity Forms OpenAI as of version 1.0-beta-1.9. Any feeds using these endpoints will automatically be converted to the chat/completions endpoint.

Live Results with Live Merge Tags

If you’d like to show live results from OpenAI directly in your form, Populate Anything’s Live Merge Tags (LMTs) make this possible. LMTs automatically replace a merge tag with live content as soon as its associated field is updated. LMTs work anywhere inside your form (field values, labels, HTML content) and can be used to process GF OpenAI feeds.

You can get the “live” version of your GF OpenAI feed merge tag by enabling the Enable Merge Tag setting on your GF OpenAI feed and copying the desired merge tag.

@{:1:openai_feed_2} → Processes the specified feed any time the value in field ID 1 changes. Change the “1” to the ID of whichever field should trigger the Live Merge Tag.

@{all_fields:openai_feed_2} → This LMT will be processed when any field value changes. This is useful if your prompt contains more than one field value.
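The trigger rule for these two tag forms can be illustrated with a small Python sketch: parse the tag, then reprocess the feed only when the changed field matches the tag’s field ID, or on any change for `all_fields`. This is a hypothetical model of the documented behavior, not Populate Anything’s actual parser; `parse_live_merge_tag` and `should_reprocess` are made-up names.

```python
import re
from dataclasses import dataclass
from typing import List, Optional

FEED_PREFIX = "openai_feed_"


@dataclass
class LiveMergeTag:
    field_id: Optional[int]  # None for @{all_fields:...}
    feed_ids: List[int]      # N from each openai_feed_N modifier
    modifiers: List[str]     # all modifiers, e.g. ["openai_feed_2", "nl2br"]


def parse_live_merge_tag(tag: str) -> LiveMergeTag:
    """Split a tag like @{:1:openai_feed_2} into its field ID and modifiers."""
    match = re.fullmatch(r"@\{([^{}]*)\}", tag)
    if match is None:
        raise ValueError(f"not a live merge tag: {tag!r}")
    parts = match.group(1).split(":")
    if parts[0] == "all_fields":
        field_id = None
        modifier_text = parts[1] if len(parts) > 1 else ""
    elif len(parts) >= 2:
        # Layout is Label:FieldID:modifiers; the label is often left empty.
        field_id = int(parts[1])
        modifier_text = parts[2] if len(parts) > 2 else ""
    else:
        raise ValueError(f"missing field ID: {tag!r}")
    modifiers = [m for m in modifier_text.split(",") if m]
    feed_ids = [int(m[len(FEED_PREFIX):]) for m in modifiers if m.startswith(FEED_PREFIX)]
    return LiveMergeTag(field_id, feed_ids, modifiers)


def should_reprocess(tag: str, changed_field_id: int) -> bool:
    """True if a change to changed_field_id should re-run the tag's feed."""
    lmt = parse_live_merge_tag(tag)
    return lmt.field_id is None or lmt.field_id == changed_field_id
```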

Trigger Live Results by Button Click

When you use Live Merge Tags with an OpenAI feed, by default, the feed processes as soon as the merge tag value changes. This may not be ideal if the user is still entering data.

Thankfully, there’s a snippet that solves this problem. This snippet changes the default behavior to only trigger the feed when a button is clicked instead of when the input field is changed.

Merge Tag Modifiers

Include Line Breaks in Text

If you’re looking to include line breaks when using an OpenAI merge tag with an HTML field, you can include the :nl2br modifier in your merge tag code. Here’s an example of what the output looks like with and without the modifier:

Without the modifier, all results are on one line:

@{:1:openai_feed_2}

With the modifier added, each result gets separated on its own line:

@{:1:openai_feed_2,nl2br}
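Browsers collapse plain line breaks in HTML into spaces, which is why unmodified results appear on one line. The modifier is named after PHP’s `nl2br()` function; assuming it behaves the same way, it inserts `<br />` before every line break while keeping the break itself. A Python sketch of that documented PHP behavior:

```python
import re

# PHP's nl2br() recognizes \r\n, \n\r, \n, and \r as line breaks. Alternatives
# are tried left to right, so two-character breaks are matched before single ones.
_LINE_BREAK = re.compile(r"\r\n|\n\r|\n|\r")


def nl2br(text: str) -> str:
    """Python approximation of PHP's nl2br() with its default XHTML <br />."""
    return _LINE_BREAK.sub(lambda m: "<br />" + m.group(0), text)
```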

Integrations

Populate Anything

With the power of Live Merge Tags, you can process OpenAI feeds as your users type and display the response live in an HTML field or capture it in any text-based field type (e.g., Single Line Text, Paragraph).

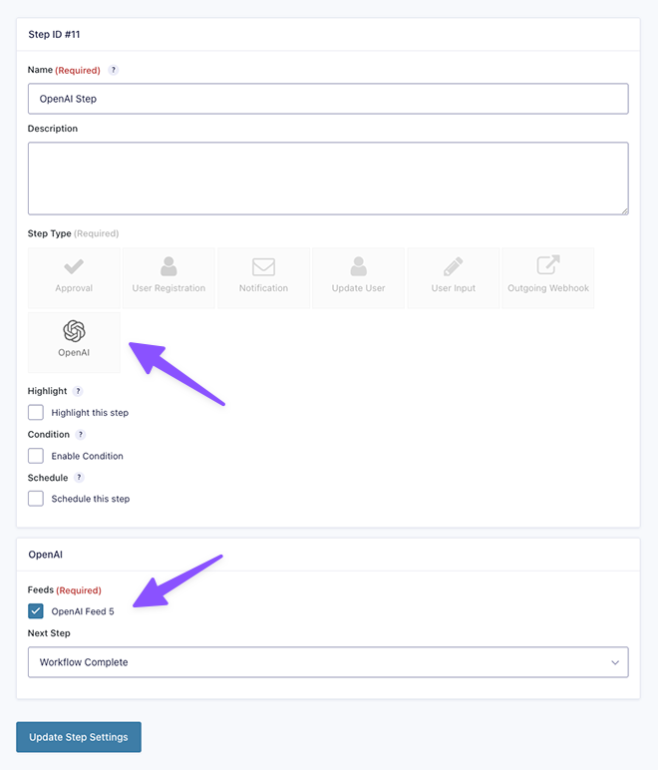

Gravity Flow

GF OpenAI is fully integrated with Gravity Flow. While building your workflow, choose OpenAI as the step type and select the OpenAI feed you’d like to use.

Here’s a quick example of what a workflow in Gravity Flow could look like:

- A user submits a form designed to create an outline for their article.

- GF OpenAI generates the outline.

- A Gravity Flow approval step requires an editor to approve the outline.

- After approval, a rough article draft is generated based on that outline via GF OpenAI.

- A Notification step brings users back to review the generated article, make edits, and submit a final version.

- Post Creation generates an article and publishes it.

Known Limitations

- Custom GPTs are not available via OpenAI’s API at this time, so they cannot be selected as a model via this plugin.

FAQs

How much does this cost?

Gravity Forms OpenAI (this plugin) is free! OpenAI (the service this plugin integrates with) also gives you $18 in free credit when you sign up. After that, OpenAI is still remarkably inexpensive. As of Dec 15th, 2022, you’ll pay about $0.02 per 1,000 tokens (~750 words) generated by OpenAI. Full pricing details here.
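The arithmetic behind that estimate, as a short Python sketch. It uses only the December 2022 figures quoted above (about $0.02 per 1,000 tokens, roughly 750 words per 1,000 tokens); current rates differ by model, so treat the constants as placeholders to update from OpenAI’s pricing page.

```python
# Figures quoted in the FAQ above (as of Dec 15, 2022); update for current rates.
PRICE_PER_1K_TOKENS_USD = 0.02
WORDS_PER_1K_TOKENS = 750  # rule of thumb: ~750 English words per 1,000 tokens


def estimate_tokens(words: int) -> float:
    """Convert a word count to an approximate token count."""
    return words * 1000 / WORDS_PER_1K_TOKENS


def estimate_cost_usd(words: int) -> float:
    """Approximate cost of generating this many words at the quoted rate."""
    return estimate_tokens(words) * PRICE_PER_1K_TOKENS_USD / 1000


# Example: a 1,500-word article is roughly 2,000 tokens, or about $0.04.
print(f"{estimate_tokens(1500):.0f} tokens, ${estimate_cost_usd(1500):.2f}")
```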

I need some inspiration… what can I do with OpenAI?

We’ve written a series of articles focused on leveraging AI inside Gravity Forms with an emphasis on practical, real world use cases.

- 5 ways you can use Gravity Forms OpenAI right now

- Sentiment analysis using Gravity Forms OpenAI

- How to use Gravity Forms OpenAI for content moderation

- Working with AI-generated content in Gravity Forms

- How to build a content editor with Gravity Forms OpenAI

Plus, OpenAI has an amazing list of examples here. If that doesn’t whet your appetite, try asking ChatGPT itself. You’ll be surprised by how clever it can be. 😉

I want to contribute to this plugin!

If you’re looking to contribute to the codebase, PRs are welcome! Come work with us on GitHub. If you want to contribute financially, pick up a Gravity Perks license. It’s a win-win. 😄

Hi,

I’m experiencing an issue with the plugin where I’m not receiving any response from OpenAI, even though the prompt is being sent successfully. Here are the details:

Could you please assist in troubleshooting this issue? Let me know if you need any additional information.

Hey Frank,

I’m unable to reproduce this locally, so we’ll have to dig into this further to know exactly what’s going on. If you have an active Gravity Perks license, please contact us via our support form so that we can take a closer look and see what’s happening here.

Cheers,

Your video is over two years old, and AI has changed considerably since then, as you note in the post itself. Are there any more current videos showing examples of work that has been done with this plugin (case studies, etc.)?

Hi Nigel,

For a full changelog of the plugin over the past few years, I’d recommend checking out the releases page on GitHub.

Unfortunately, I don’t believe we have any newer videos showcasing the plugin. That being said, I’d strongly recommend taking a look at our more recent Premium version called Gravity Connect OpenAI.

GC OpenAI has lots of new features, including streaming, image generation and support for OpenAI Assistants.

Please let us know if you have any questions!

Any update on Gravity Connect Plugin launch date? Looking for newer GPT models. Do you have any plan to integrate other AI models like Claude and Gemini?

Hi Praveen,

We expect to launch the Gravity Connect plugins later today. If you’re subscribed to our newsletter, you should receive an email about this when it’s live. You can also check back on our website later today or tomorrow to get a license.

Currently, there isn’t a Gravity Connect plugin that integrates with Claude or Gemini, but I’m happy to forward this to our product manager as a feature request.

Best,

“I want to use the Gravity Forms OpenAI plugin. This plugin by default sends requests directly to the OpenAI API, whereas I have an API from another website that acts as an intermediary service. Is there a possibility within the plugin’s settings to change the default API address?”

Hi Ghanbari,

We currently do not have a way to use a custom API address with our GF OpenAI plugin.

Best,

Would this be able to possibly parse information from uploaded files and put them into fields? We have a client that will have their customers send in files (not always the same format) and then we would want to take that information and break out the content to export into CSV format or even as just different data fields that we can build into a report.

Hi Jesse,

It isn’t currently possible to use this to parse information from uploaded files. However, I will ping our product manager so we can add this to our feature requests tracker.

Best,

Any update on image support? Also, how about GPT-4o mini support? Thanks.

Hi Winston,

We’re working on a new version of the plugin, which should add support for these. This should be included in our upcoming Gravity Connect suite of plugins, which we’re hoping will be available somewhere in August 2024.

Best,

First of all, thank you for this great plugin. Second, I have a question: how can I add line breaks to the output when I’m not using merge tags? I show the ChatGPT answer directly on the confirmation page of my form. I’d really appreciate an answer. Regards.

Hi Claudio,

You’ll want to map the output to a Paragraph Text field with the Rich Text Editor enabled, to preserve the formatting, including line breaks. You can then insert the merge tag of the paragraph field on the confirmation page to show it.

Best,

Loving this perk, superb work! Quick question: could I make the OpenAI feed trigger when either of two specific form fields changes? Triggering it every time any field changes becomes quite intensive.

Thank you!

Hi Paul,

It currently only supports triggering the OpenAI feed when a specific field changes, or, with all_fields, when any field on the form changes. Triggering on changes to two specific fields is currently not supported. I will forward this to our product manager as a feature request.

Best,

Hi there,

Does this allow for any integration with Vision, to use GPT-4 to understand images which are uploaded through a Gravity form?

Thanks!

Hi James,

Images are currently not supported. We have plans to add support for images in the premium version of this plugin. The ETA for the premium version is somewhere around Q2 or early Q3 of this year.

Best,

How do you create multiple prompts for different tasks, like Rewrite, Correct Grammar, Expand, etc.?

Hi Praveen,

If you want to use a single form with multiple potential prompts, you could store the prompts in a choice-based field like a drop down. You could then insert the merge tag for that field into the prompt in the feed.

If instead you’re referring to chaining prompts so that the result of one prompt is fed into another prompt, you would need to use multiple forms to accomplish this. You could use GP Easy Passthrough to pass through values from one form to the other.

Is there a way to activate the feed from within GravityView? I have OpenAI feeds working well in the form, but it would be a great benefit if, when the user makes changes in GravityView, the AI feed would process again using the same settings.

If it does do this, I’d be very curious to learn how to fix my process.

Hi Glen,

It appears that reprocessing GF OpenAI feeds when an entry is edited in GravityView is currently not supported. I’ll forward this to our product manager as a feature request.

Best,

Haven’t tested, Glen, but this might do the trick: https://docs.gravitykit.com/article/534-trigger-feeds-edit-entry

Any update?

Hey Ana,

This has been forwarded to our Product Manager as a feature request. You can try giving David’s suggestion a go and see if that works for you:

https://docs.gravitykit.com/article/534-trigger-feeds-edit-entry

Is there a way to train the AI model on my GF entries? And then use this perk for querying?

Hi Marjan,

Unfortunately, it’s not possible to use Gravity Forms with our OpenAI plugin to train the model, but you could train the model outside of the form and use that trained model with your form. Here are instructions for training and fine-tuning a model. Once you have a trained model in your account, it will show up as an available model in the feed settings.

I hope this helps.

Best,

David, this is brilliant work. I have a few questions, and maybe some enhancements to propose – what is the best channel for this?

Thanks, D

Hi Dan,

You can send your questions and feature request via our support form.

Best,

This used to work perfectly, and now I see that my form does not work at all. It produces no results, and I haven’t changed anything. I did try changing to Chat GPT 4, but still nothing. Any ideas why? No errors are dropping in the console.

Hi Elsie,

We recently updated the plugin. Can you download a new copy of the plugin, use it to update the one you have, and see if that resolves the issue? Also, you may want to check whether your OpenAI account still has credits.

Best,

I have a quick question. I have created a form where information about upcoming events, along with their dates, is entered, and then ChatGPT shortens and abbreviates words for me so I can put it up on a sign outside the school. The character limit is set to 150 characters in the ChatGPT instructions. Unfortunately, the character count that ChatGPT comes up with doesn’t match the Gravity Forms character count (as of right now, I set the Gravity Forms character count 30 characters higher so it outputs the right number of characters from what ChatGPT returns). Is there a fix for this besides what I am doing presently? https://orientsd.org/reader-board/

Hi Jair,

Unfortunately, ChatGPT isn’t great about counting characters and respecting limits you’ve set. You might need to get creative with your prompt. For example, I have had some luck with prompts written like this:

Sentiment Analysis: The model text-babbage-001 has been deprecated; learn more here: https://platform.openai.com/docs/deprecations. They are all deprecated now.

Thanks for the heads-up about this, Jerome. We’ll contact the product team about it.

Hey Jerome, we’re working on an update to the plugin that deprecates all models that are no longer available. 👍

Any update on releasing an update?

Hoping to have the new update ready by next week. 👍

Great, thank you! Looking forward to using this with various projects.

Hi guys. Great article. The use case I am looking for is the ability to send whole GF datasets to OpenAI for analysis, e.g., send the JSON response from a Gravity Forms API request and ask OpenAI to identify trends and patterns in the data. I tried doing it myself using their API, but sending files is a bit tricky, as you need to create an Assistant, then get the file ID, etc., so I gave up! Do you have any grand plans to add functionality for sending larger sets of form data?

Hey Adnaan, no specific grand plans for sending large datasets to OpenAI but I’m curious what your expected flow for this would look like?

Is there a plan to update this plugin with the latest non-deprecated GPT models?

All of the ones available for selection via the plugin feed configuration screen are deprecated as of today :(

Hi Gil,

If you update to the latest version of the plugin, you can use the Chat Completions endpoint. You can download the latest version of the plugin at the top of this page. We’ll be removing the deprecated endpoints from the plugin soon.

Any plans to integrate this with custom models, allow images, and/or maybe integrate with Meow’s AI Engine plugin to use it with custom models?

Hey Nathan, we have big plans for this little guy. 😄

By custom models, I assume you’re talking about a fine-tuned model? If so, these should already be showing up in your settings if they’re owned by the same account for which you’ve entered the API key in the plugin.

Images are coming in our premium version of this plugin. I’d expect to see something here around Q2.

Can I use this to moderate content from profanity and adult content?

Hi Wissam,

Absolutely. We wrote an article detailing how to set this up on the Gravity Forms blog.

I was curious if there was any documentation on this to account for the email I received from OpenAI — I saw that the latest version of the plugin should have v4 available, and it says I need that active on my account; but I can’t see how to do that:

Earlier this year, we announced that on January 4th, 2024, we will shut down our older completions and embeddings models, including the following models (see the bottom of this email for the full list): text-davinci-003, text-davinci-002, ada, babbage, curie, davinci, text-ada-001, text-babbage-001, text-curie-001, text-davinci-001. You are receiving this email because in the last 30 days…

According to OpenAI’s docs, GPT-4 API access is available to any user who has made a successful payment of $1 or more.

Assuming you qualify there, GPT-4 should be selectable as a language model when setting up a feed. You’ll first need to select Chat Completions as your OpenAI Endpoint.

I took a closer look at this, and I think it is not based on a deposit but solely on the amount spent. I deposited $10 for testing, but my actual usage is still only 60 cents. I am hoping that once it goes over $1, the option will be available. I will come back here to confirm once I pass that threshold.

Thank you, Anthony

Hi Anthony,

It might be worth checking with OpenAI support on that. When I tested this, my account didn’t have a monthly spend above $1. Once I added the minimum amount of credits ($5) to my plan, GPT-4 was available for use.

Hi,

I can’t seem to generate responses using Live Merge Tags if I use the GPT 4 32k Chat Completions option. It only seems to work with GPT 4 (not the 32k version). Any ideas why this would be?

Is there any ETA on when GPT 4 Turbo will be available as an option?

Hi James, we’ve followed up via email. We’ve already passed this on to our development team. About GPT 4 Turbo we don’t have an ETA yet.

Best,

I am actually in the same boat; I was just curious if this was resolved?

Thank you, Anthony

Hi Anthony,

I don’t know if you already have this, but we released an update to GF OpenAI that adds GPT-4 Turbo. You can find it here: https://github.com/gravitywiz/gravityforms-openai/releases/tag/1.0-beta-1.8

Best,

I apologize for the long delay on this, but yes, I do appear to be using the latest version.

We’ve followed up via email, Anthony, to check what could be going on.

Will there be any support for GPT-4 Vision? It would integrate perfectly with the File Upload Pro plugin.

No definite plans but it is enticing! Agreed that this would be a nice complement to File Upload Pro. 🙏

Hey guys,

I have a question: is it possible to give output from OpenAi back to user after payment has been processed?

Example: a user fills in several fields, selects a few options, and pays. We give them an OpenAI-generated answer back.

Hi,

You could insert the openai merge tag on the confirmation page to display the openai response after submission. Since the confirmation page will be displayed after payment is completed, the openai content will show after payment is made.

Best,

Hello, thank you for the great plugin you’ve created. I wanted to know if you have a program for this plugin to connect to DALL·E as well? Do you have any updates for it?

Hi, not at this time, but I’ll pass it on as a feature request to our product manager.

I don’t see the option to use GPT-4. It only shows me these options:

text-davinci-003, text-curie-001, text-babbage-001, text-ada-001, code-davinci-002, code-cushman-001

What am I missing?

Hi Luke,

If it’s been a while since you installed the plugin, download the Gravity Forms OpenAI plugin again to get the latest version. On the feed settings page, you should see the Chat Completions endpoint; select it to show the GPT-4 model.

Best,

Just wanted to say thank you for the wonderful plugin. It has helped me to get a lot of things done :)

Hi KA,

You’re welcome. Glad to know the plugin has been useful to you.

Best,

When I put many options for analysis in the prompt, the data transfer speed decreases and it takes a long time to display the results to me. When I only put one option from the form in the prompt, it determines the result in less than 30 seconds, but when the options increase, it takes more than 2 minutes. I would appreciate it if you could suggest how to solve this problem.

Hi Eli,

API requests to OpenAI can be quite slow, and the speed depends on the amount of data fed into the request, since a lot of processing happens behind the scenes. You can use this snippet to make the feed asynchronous. This should allow the form to submit faster and process the OpenAI feed in the background.
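The idea behind asynchronous processing can be illustrated with a small Python sketch: the submission handler saves the entry and returns right away, while the slow OpenAI call runs on a background worker and writes its result to the entry when it finishes. The snippet itself isn’t shown here and WordPress doesn’t run Python; `submit_form`, the thread pool, and `fake_openai` are stand-ins chosen for illustration, not how the snippet is implemented.

```python
import threading
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Callable

# Stand-in for a background job queue; a WordPress site would use its own mechanism.
_background = ThreadPoolExecutor(max_workers=2)


def submit_form(entry: dict, call_openai: Callable[[str], str]) -> Future:
    """Save the entry, queue the slow OpenAI call, and return without waiting."""
    entry["status"] = "submitted"  # the confirmation can be shown right away

    def process_feed() -> str:
        reply = call_openai(entry["prompt"])  # the slow network request
        entry["openai_response"] = reply      # e.g., a mapped field or entry note
        return reply

    return _background.submit(process_feed)


# Usage: a fake API that blocks until released, to show submission doesn't wait on it.
release = threading.Event()


def fake_openai(prompt: str) -> str:
    release.wait(timeout=5)
    return f"Summary of: {prompt}"


entry = {"prompt": "my long essay"}
job = submit_form(entry, fake_openai)
```

The trade-off is that the response isn’t available at confirmation time, so anything that displays it there (merge tags on the confirmation page, for example) would need to be shown later, such as in a notification.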

I want to receive 10 different suggestions from ChatGPT and display each suggestion in a checkbox so that the user can select whichever they want. However, when I place the code in the options, it combines all the choices into a single selection instead of separating them. Do you have any ideas on how I can solve this issue?

Hi Aras,

I’m unsure if this is possible. If you have an active Gravity Perks license, submit a ticket via our support form so that we can forward this as a feature request and have our developers look into it.

Best,

Thank you for the great plugin you’ve created. I have an issue with the multi-page form. When I submit the form and the result from the API is displayed on the last page, I want the user to reach that page after filling in a series of information and see the result based on the provided data. However, when the user fills in their information, such as their name or work experience, the “Next” button takes a long time to become active as it analyzes the information. Is this issue related to the plugin and is it a normal occurrence? or does it have to do with the speed of my server and the processing of information from my server’s side?

Hi Mo,

API requests to OpenAI can be quite slow given the amount of processing that is happening behind the scenes. You can use this snippet to make the feed asynchronous. This should allow the form to submit faster and process the Open AI feed in the background.

If this doesn’t work for you, we’ll need to take a look at your setup, and for that you’ll need a Gravity Perks license. Please note that while the plugin is free, support is not. We’re happy to answer questions here, but help with specific configurations requires a Gravity Perks license.

If you have one already, we’ll be happy to help if you contact us via the support form.

Best,

I want to create an extension that, when a user enters the name of a job, searches the internet and lists job advertisements related to that job. Can this be done if I connect this extension to the GPT-4 API?

Hi Mo,

This will depend on the capabilities of GPT-4. I suggest you test this on the ChatGPT platform and see if it’s possible for GPT-4 to search the internet for jobs matching a keyword. If the result is positive, you can use our GF OpenAI plugin to connect Gravity Forms to the GPT4 API and return the results on the confirmation page.

Best,

Hi Dario, as you might remember, I’m working on a project where I need to use OpenAI’s ChatGPT within a form to suggest modifications to user inputs. This process happens three times in sequence, meaning I have to call OpenAI’s ChatGPT three times and use three live merge tags to display the resulting suggestions to the user.

I’m struggling with two key challenges:

1) Figuring out the best format for the form. Would a multi-page form work best?

2) Preventing all live merge tags from updating simultaneously every time the form progresses.

Using the workaround you provide, I’ve ensured the live tag updates only when the user clicks ‘next’. However, this still triggers an update for all OpenAI-connected live merge tags all the time and seems to bog things down.

Ultimately, I aim to incorporate three or four OpenAI-connected merge tags or feeds within a single multi-page form. Is this objective feasible? I’d appreciate any advice on this.

Sorry to pepper you with this: my live merge tags are populating with some weird values as soon as the page loads.

I want them to wait out until a specific checkbox (Field ID 3) in my form (Form ID 49) is checked. I tried some JavaScript magic to make the GPPA listener take a break when the page loads and only get back to work when the checkbox is changed. But nope, those live merge tags are still doing their own thing right on page load.

Oh, and I’m using OpenAI for some feed stuff, and I’ve made it asynchronous to speed things up with form submissions.

Any ideas on how I can get this to work? Is there any secret sauce in Gravity Perks, like built-in filters or actions, that I could use to control these live merge tags? Any other tips or tricks?

Hi Marc,

Both a single-page form and a multi-page form will work just fine. However, I’m unsure if it’s currently possible to prevent all the live merge tags from updating simultaneously every time the form progresses. I’ll forward this to our product manager as a feature request, and we’ll get back to you with updates on this.

Best,

When I map the AI output to a field, it removes all the formatting that ChatGPT has created.

I’m pulling the mapped field into a GravityView layout so it can be displayed in our client’s account, but the formatting being gone is making it just look like a blob of text. I tried using the :nl2br merge tag to add breaks but it didn’t work because it is being mapped via the back end Ai settings. Any ideas on how to keep the line breaks and formatting for the mapped field?

Hi Rachel,

You’ll have to map the AI output to a Paragraph Text field with the Rich Text Editor enabled to preserve the formatting from ChatGPT.

Best,

When I integrate Live Merge Tags with an OpenAI feed in a multi-page form, the displayed information from the feed tends to update multiple times, seemingly reflecting different thought stages of ChatGPT, until the OpenAI API process completes. How can I modify the setup so that only the final output is displayed? FYI: I’ve already implemented a snippet to initiate the API call only upon clicking the ‘next’ button.

Hi Marc, we’ve already followed up by email with a snippet to process OpenAI feed async.

This plugin causes an ERROR 500 when you try to edit Limit Submissions feeds from the GP Limit Submissions plugin! Once you deactivate the OpenAI plugin, it works.

Hi Maradona,

This isn’t a known issue, so we’ll have to dig into this further to know exactly what’s happening. If you have an active Gravity Perks license, you can contact us via our support form so that we can ask for some additional information and look into this further.

Best,

Thank you for a great plugin. I am using it with a form that uses the plugin to pass some data to the api and then save the response to a custom post type. It works great but takes a couple of minutes to complete. Is there a way to immediately send the user to the confirmation screen and run the api call in the background? Just curious.

Hi Steve,

We have a snippet that will process the OpenAI feed asynchronously.

Following up on Ali’s question about Azure, we have a use case that needs GPT for our private data, not for the general ChatGPT.

The Azure team seem to support that as per this link: https://techcommunity.microsoft.com/t5/ai-applied-ai-blog/revolutionize-your-enterprise-data-with-chatgpt-next-gen-apps-w/ba-p/3762087

It would be really great if we could be a Gravity Forms OpenAI customer, link to an Azure-hosted dataset, and have a similar usage-based pricing model with Gravity Wiz.

Do you think this is possible in the near future? Would much rather wait than to chase off on a major one-off development project.

Thanks!

Marty

Hi Marty,

This is currently not in our plans in the near future. I will still forward this as a feature request. Hopefully, with more similar requests, this may be a priority.

Best,

Hello!

Any chance we can use Azure? I’m having some issues with OpenAI but have Azure API access.

Hi Ali,

This is currently not possible. I will forward your request to our Product manager as a feature request.

Best,

Thank you! I’ve also heard that, because of the extreme load on ChatGPT, Azure might offer better speeds.

Does anyone have an example of executing this from a button click? I can’t seem to get the code to work.

Hi Conor,

I’m not sure I’m tracking this question. When the submit button is clicked, the OpenAI feed processes and the results are stored in the field that has been mapped in the feed settings.

If you’re having trouble using the plugin and are a Gravity Perks subscriber, reach out to support. We’re happy to help.

Hi,

Any updates on GPT-4 support in the plugin? I saw below my last question that you are working on it.

Hi Harry,

Support for GPT-4 has been added to the OpenAI plugin. Download the plugin again to get the latest version. It requires that your account has GPT-4 enabled.

Best,

Hi

Sorry if this question is too easy. I have installed this plugin and configured it. I now see GPT answers in my entry list. How can the user see the GPT answer to their question on the confirmation page?

Thanks

Hi Karl,

If you’ve mapped the result to a field, enter that field’s merge tag on the confirmation page. If not, enter the OpenAI feed’s merge tag on the confirmation page.

Best,

First of all, I would like to apologize for asking so many questions, and at the same time thank you for promptly answering all of them. I find so many useful possibilities for this tool.

How difficult (or impossible) would it be to integrate it into GravityCharts? I was wondering if it could be used to generate different kinds of charts based on the prompts.

Hi Claudio,

This isn’t currently planned, but I’ll pass it along to our product manager.

Hi,

Is GPT-4 already usable in the plugin?

Hi Harry,

Not yet. We plan on adding support for GPT-4, but we’re still waiting for access.

Hi @GravityWiz, we can provide you with a GPT-4 API key so you can add support for GPT-4. Just contact us.

We have one now and are working on adding support for GPT-4 to the plugin as we speak. 😄

Hi GravityWiz, thanks for this great plugin, very interesting to explore the possibilities of AI within Gravity Forms.

I recently watched your ‘OpenAI Unleashed’ workshop and was particularly interested in the marketing and AI section. One idea there was Cole’s mock-up of a form for content optimisation, where the user inputs a piece of content into one field and then ticks checkboxes underneath for suggestions such as ‘Readability’, ‘Wordiness’, ‘Clarity’, ‘Tone’, ‘Keyword Density’, etc. Initially, I tried the following prompt (and multiple variations of it):

“Provide suggestions to optimise the below content. Separate into a list with the following sections: Readability, Wordiness, Clarity, Tone, Keyword Density

{Content to Optimise:1}”

But it is just outputting them as a list with generic explanations for those sections, rather than it being specific to the content from the field. The above prompt works fine within ChatGPT itself.

It may be that I am just missing something obvious, but I have tried many different prompts and can’t seem to get it to work. Do you have any suggestions?

Also, the next step would be to replace the hardcoded sections with values from checkboxes. Assuming this works, would it be a case of using the merge tag for the checkbox? What about the case where a checkbox hasn’t been checked but the merge tag is still passed to the prompt?

Any help/advice would be really appreciated, thanks!

Kind regards, Adam

Hey Adam,

Thanks for reaching out.

If the prompt works as intended in ChatGPT, this sounds like an issue with the plugin configuration and/or with making sure the inputted field values (i.e. your content yet to be optimized) are being sent to OpenAI and back.

Have you ensured your OpenAI feeds are configured correctly by testing that they work with other, simpler prompts and field inputs?

After that, I would recommend moving the merge tag around within the prompt and reducing it to a single request first (i.e. just requesting clarity, or tone, etc.) to see if this provides a result relevant to the text in {Content to Optimise:1}. Once you’ve confirmed this works, you can start adding more.

Let me know how this goes and I can help further.

Regarding your second question — have you explored using this in part with Populate Anything’s Live Merge Tags?

https://gravitywiz.com/documentation/gravity-forms-populate-anything/#live-merge-tags:~:text=The%20Frontend-,Live%20Merge%20Tags,-When%20GF%20Populate

Thanks Adam!

PS — Feel free to reach out directly at support@gravitywiz.com.
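On Adam’s second question (unchecked checkboxes still reaching the prompt), the logic can be sketched in Python. This assumes unchecked choices arrive as empty strings; `build_prompt` is a hypothetical helper, not part of the plugin. It keeps only the checked options and returns `None` when nothing is checked so the request can be skipped.

```python
from typing import Optional

def build_prompt(content: str, selected: list[str]) -> Optional[str]:
    """Build the optimisation prompt from only the checked options."""
    # Assumption: unchecked choices arrive as empty strings, so drop them.
    sections = [s.strip() for s in selected if s.strip()]
    if not sections:
        return None  # nothing checked: skip the request instead of sending an empty list
    return (
        "Provide suggestions to optimise the content below. "
        "Separate them into a list with the following sections: "
        + ", ".join(sections)
        + "\n\n"
        + content
    )

print(build_prompt("Our new course helps students learn.", ["Clarity", "", "Tone"]))
```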

Hi Cole,

Many thanks for your detailed response! Yes I have a few other test forms such as a blog post generator (from certain input fields e.g. keywords, target audience, tone, etc.), one for sentiment analysis like in the workshop and another for content summarisation.

These are all working correctly, so I think you’re right about it being the prompt/placement of the merge tag in the prompt that is the issue. I have tried a few combinations and reducing it to request just one optimisation and it has been a bit hit and miss still. It has output something I would expect at times and not at others.

Therefore, since it is working for other feeds, I don’t think it is a configuration problem with the plugin specifically; it seems to be a case of trial and error before getting the right prompt. I may also need to adjust other settings such as Max Tokens, but for now I will try a few more iterations of the prompt and see what happens.

It is also not essential; it is more of an experiment at the moment. I work at a university in the UK, and we are currently researching various AI tools for our department. We already use many Gravity Forms across the site, so I thought this would be a good use case to trial. If we take it further, something like the Populate Anything extension could be another potential use case for us.

Many thanks again for the reply, your advice is appreciated and if I have anything else, I will reach out to your support!

Hey Adam,

Thanks for the response. Sounds like an exciting use case. I’ll second that — it sounds like you may have to tinker a bit more with your prompting and configuration. Wishing you the best of luck though, we’re always here otherwise.

And one other thought — if you do find yourself creating something you’re excited about and want to share it with us, we’re always open to feature customers in Gravity Wiz Weekly! Just drop us a line if so.

Cheers!

Hi guys – loving this plugin! Having a little issue though and not sure if anyone else has the same when trying to view paragraphs of text from AI on a results page…

I am using Completions and mapping the result to a field (in my case a Paragraph field). On the entry details I can see that OpenAI has created 3 separate paragraphs, but in the field itself there are no paragraph breaks at all, so the output is one continuous block of text (see what I mean on https://smadigital.app/6steps-actioncoach/quiz-complete-thankyou/?geid=93&eid=02a465 towards the middle of the page).

Any help is super appreciated!

Hi Steve,

To keep the paragraph breaks, enable the option to use the Rich Text editor under the Advanced tab of the Paragraph field setting.

Best,

Something I noticed is that I cannot change the order of a feed once it is created. If that feed depends on the value of a different feed that was created after it, it will not show the result. It would be good to implement a drag-and-drop option to change the order of the feeds.

Thanks for the feedback, Claudio. I saw that you submitted an issue as well. I’ll link that here for other folks to weigh in on and we’ll prioritize accordingly.

https://github.com/gravitywiz/gravityforms-openai/issues/10

I was wondering when Gravity Wiz was going to officially add DALL·E support to this plugin.

It looks like it’s in the code last I looked, but isn’t an option to use, unless I missed that announcement. I thought it was to be integrated with another GravityWiz add-on related to uploads. This would be very useful!

Hey Alexander, we do have plans to add DALL·E support but it hasn’t arrived just yet. We did start to explore it but the code that’s currently in place does not work. No definite ETA but customer demand is hugely motivational for us so thanks for letting us know you’re waiting for it. 🙂

Hi, I have installed the main Gravity Forms plugin and also the OpenAI integration plugin. I tried to use Edits mode. Here are my questions: 1) Should I always write {Prompt:1} in the Input? What exactly does {Prompt:1} mean? 2) Can my users correct their mistakes with this program?

Hello! The input for the Edit endpoint would be the merge tag for whatever field you want to be edited by the AI. You can use the merge tag selector (the {..} icon directly above the field, top right) to select the desired field and input its merge tag into the setting.

If you want to overwrite the user’s original input with the AI-edited input, you could use the “Map Result to Field” setting to save over the original input. If you want to present the suggested edit to the user, you could use an HTML field and the Live Merge Tag version of the GF OpenAI feed (looks like @{:FIELDID:openai_feed_123}) to show the suggestion and let the user decide whether to implement it. Please note, live results require Populate Anything.

I am facing a problem: it gives the same answer every time. I made a simple form with one text input field and one output field. I am using Chat Completions with gpt-3.5-turbo. Here is what I wrote in the message section: “Write SEO optimized title only with this keyword ‘{Input:1}’”. I always get the same title for the same keyword.

Hey Philip, results are cached for 5 minutes by default. I’ve sent you an experimental build of GF OpenAI with support for a filter to disable this caching (check your email).
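To see why an identical prompt keeps returning the identical answer, here is a minimal Python sketch of a time-limited cache keyed by prompt text. It is an illustration of the general technique, not the plugin’s actual caching code; the class and parameter names are hypothetical, and the 300-second default mirrors the 5 minutes mentioned above.

```python
import time
from typing import Callable

class TTLCache:
    """Cache responses per prompt for a fixed number of seconds."""

    def __init__(self, ttl_seconds: float = 300, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds  # 300 s = the 5-minute default
        self.clock = clock      # injectable so expiry can be tested
        self._store: dict[str, tuple[float, str]] = {}  # prompt -> (stored_at, response)

    def get_or_fetch(self, prompt: str, fetch: Callable[[str], str]) -> str:
        now = self.clock()
        cached = self._store.get(prompt)
        if cached is not None and now - cached[0] < self.ttl:
            return cached[1]    # identical prompt within the TTL: no new API call
        response = fetch(prompt)  # miss or expired entry: call the API
        self._store[prompt] = (now, response)
        return response
```

Disabling caching, as described in the reply, corresponds to always calling `fetch` and skipping the lookup.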

Is it possible to include the values of each field from my form in my prompt? For example, “The user {user} is {age} years old and needs a health plan. The chosen plan is {chosen plan}”…

Hi Claudio,

Definitely, you can combine static text with merge tags in the prompt. The entire prompt is sent to OpenAI when the form is submitted or live merge tags are processed.
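As a rough illustration of what happens to Claudio’s example, here is a simplified Python sketch that replaces `{Label:ID}` merge tags with submitted values before the prompt is sent. Gravity Forms performs this substitution itself; `render_prompt` is hypothetical and ignores modifiers and sub-input IDs such as `{Name:1.3}`.

```python
import re

# Matches simple field merge tags like {Age:2}; the label part is ignored.
MERGE_TAG = re.compile(r"\{([^{}:]*):(\d+)\}")

def render_prompt(template: str, values: dict[int, str]) -> str:
    """Replace {Label:ID} merge tags with submitted field values."""
    def substitute(match: re.Match) -> str:
        field_id = int(match.group(2))
        return values.get(field_id, "")  # assumption: unknown fields render empty
    return MERGE_TAG.sub(substitute, template)

template = ("The user {Name:1} is {Age:2} years old and needs a health plan. "
            "The chosen plan is {Plan:3}.")
print(render_prompt(template, {1: "Ana", 2: "42", 3: "Gold"}))
```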

Is there a way to persist a chat in Turbo? E.g., I have a workflow with a fairly detailed initial prompt, and I then use its results to generate several others. Rather than sending the results as part of each subsequent prompt, I’d like to just add additional queries.

Sounds like you’re looking for something more ChatGPT-like? If so, we don’t currently have a solution for that, but it’s on our radar.

Something like that yes. Using a fairly complex prompt as a setup, then generating several different types of content based on the results of the first prompt. Asking for it all at once is more tokens than allowed.

I’m pretty sure you could accomplish this using CopyCat: copy the response to another field, which then runs multiple prompts from the response.

This is doable with a combination of CopyCat, Form-Pass-Thru, and Live Merge Tags. You might be able to do it with less than these 3 components as well.

I know some people had ideas about how to get this working, but has anyone done it? That is, they submit a request to the AI, get a response, and then send a follow-up request where the AI takes into account the original prompt, its first response, and the second prompt?
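For context on how follow-ups work with OpenAI’s Chat Completions format: the API is stateless, so a follow-up “remembers” earlier turns only because they are resent in the `messages` array with `system`, `user`, and `assistant` roles. The Python sketch below only builds such a payload (no API call is made); `add_message` is a hypothetical helper. Note that every resent turn counts toward the token limit, so this does not by itself solve the token concern raised above.

```python
def add_message(history: list[dict], role: str, content: str) -> list[dict]:
    """Return a new messages list with one more turn appended."""
    return history + [{"role": role, "content": content}]

# Turn 1: the detailed setup prompt.
messages = [{"role": "system", "content": "You are a marketing copywriter."}]
messages = add_message(messages, "user", "Summarize our product: a solar-powered desk lamp.")
# Store the model's first reply so the follow-up can build on it.
messages = add_message(messages, "assistant", "A compact desk lamp that charges from sunlight.")
# Turn 2: the follow-up only needs the new instruction.
messages = add_message(messages, "user", "Now write three taglines based on that summary.")

payload = {"model": "gpt-3.5-turbo", "messages": messages}
```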

I use Live Merge Tags in my Gravity Forms to populate some text fields with OpenAI. Unfortunately, when I click the submit button of my form, all the text fields with a live OpenAI merge tag are submitted to OpenAI a second time (so I’m billed twice). Is there a workaround to prevent this from happening? Thank you for your amazing plugin and free support.

Hi Sara,

This sounds like a configuration issue. Do note that while the plugin is free, support is not. We’re happy to answer questions here, but help with specific configurations requires a Gravity Perks license.

If you have one already, we’ll be happy to help if you contact us via the support form.

Hi, I am using the plugin with Completions. With {openai_feed_1} I get my answer back, and it works fine when I check it in Notes! BUT I also created 2 notifications, and when I add {all_fields:openai_feed_1} to the notifications, the email arrives with a different answer than the one in Notes. The one in Notes is better!

Why is that? Does it call the API more than once when I also have notifications with {all_fields:openai_feed_1}?

Thank you in advance

Hi Antonis, when testing locally, it seems to work as expected: I always get the same output. This will probably require some digging into your setup. If you have an Advanced or Pro license, you can reach out via our support form, and we should be able to help.

Hello, I save a multiline result in a text field. Unfortunately, it is then output as a single line in notifications. Is there a solution for this?

Hi Oliver, we’ve already followed up via email to help you here.

Upcoming version introduces the :nl2br modifier for better control over when new lines are honored or ignored.
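The modifier’s name suggests it mirrors PHP’s `nl2br()`, which inserts an HTML line break before each newline so multiline text renders as separate lines in HTML emails. That the plugin’s modifier behaves exactly this way is an assumption. A Python sketch of the same transformation:

```python
import re

# Match \r\n and \n\r as single breaks before falling back to lone \n or \r.
_NEWLINE = re.compile(r"(\r\n|\n\r|\n|\r)")

def nl2br(text: str) -> str:
    """Insert <br /> before each line break, keeping the break itself."""
    return _NEWLINE.sub(r"<br />\1", text)

print(nl2br("Line one\nLine two"))
```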

Hi, any plans to integrate the new model: gpt-3.5-turbo

Thanks

Best, Oliver

Hi Oliver,

Support was added in the latest release 1.0-beta-1. You can download it using the Download Plugin button above.

You must select the new Chat Completions endpoint for the model to be available.

Lifesaving plugin! In my experience with Live Merge Tags, if I exceed OpenAI token limits, no error message is displayed to the end user (and, of course, no text is generated). Is there a hook in your OpenAI plugin, Gravity Forms, or Live Merge Tags that I can connect to, so I can write custom code to send my long text to OpenAI in chunks and then return all the processed chunks to the user in one piece? If this is insane, are there any other alternatives (such as embeddings) that can somehow be used with your extension? Thank you

Hi Sara, I’ll ping our product manager for an investigation about this feature.

Cool idea but no solution for this just yet.
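Since there is no built-in solution, here is a minimal Python sketch of the chunking idea Sara describes, not a plugin feature. It uses word count as a rough stand-in for tokens (OpenAI’s rule of thumb is about 75 words per 100 tokens; a real tokenizer such as `tiktoken` would be more exact), and `call_model` is a hypothetical callable that sends one chunk to the API.

```python
from typing import Callable

def chunk_text(text: str, max_words: int = 1500) -> list[str]:
    """Split text into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

def process_in_chunks(text: str, call_model: Callable[[str], str], max_words: int = 1500) -> str:
    """Send each chunk separately and join the responses in order."""
    return "\n\n".join(call_model(chunk) for chunk in chunk_text(text, max_words))
```

One trade-off: each chunk is processed independently, so the model loses context across chunk boundaries.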

Unless there is a better way, I got embeddings to work by hooking into this plugin’s gf_openai_request_body filter: gathering the prompt text, using curl to get the prompt’s embedding vector from OpenAI, sending it to the vector DB, getting results back and formatting them as appropriate, and then modifying the request in that filter so that what it sends to OpenAI is your embedding results plus your prompt.
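The retrieval and prompt-assembly steps of Alex’s approach can be sketched in Python. This sketch assumes the embedding vectors are already computed (in his setup they come from OpenAI and a vector DB), uses toy two-dimensional vectors, and leaves out the PHP `gf_openai_request_body` filter itself; `top_k` and `augment_prompt` are hypothetical helpers.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def top_k(query_vec: list[float], docs: list[tuple[str, list[float]]], k: int = 2) -> list[str]:
    """Return the k document texts most similar to the query vector."""
    ranked = sorted(docs, key=lambda d: cosine_similarity(query_vec, d[1]), reverse=True)
    return [text for text, _ in ranked[:k]]

def augment_prompt(prompt: str, context: list[str]) -> str:
    """Prepend the retrieved context to the user's prompt."""
    return "Context:\n" + "\n".join(f"- {c}" for c in context) + "\n\nQuestion: " + prompt

docs = [("refunds take 5 days", [1.0, 0.0]),
        ("shipping is free", [0.0, 1.0]),
        ("refund policy", [0.9, 0.1])]
print(augment_prompt("How long do refunds take?", top_k([1.0, 0.0], docs)))
```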

Thanks for the feedback Alex!

Can we connect this to the gpt-3.5-turbo API that came out this week? Or DALL·E 2 for images?

Do you have plans to implement the new ChatGPT API to the plugin? Currently using text-davinci-003, but I think that gpt-3.5-turbo can help me create a better crafted output.

Heck yeah, Freddy. It’s available in the latest version above. 😉

Do you have more in depth documentation on how to use this plugin? I’m pretty lost… haha I know what I’m trying to build, but got very lost trying to get there ;-}

Hi Michael, if you have an Advanced or Pro license, you can reach out via our support form and we should be able to help. We will have a Workshop on March 2nd about Open AI that you can join too.

Best,

Is there any way to do conditional logic inside the actual prompt? Do the Gravity Forms notification/confirmation shortcodes work?

Hi Lewis,

There isn’t, but we’d love to hear more about your use case. Can you reach out to us via our support form? We’ll see if we can come up with a solution for you.

will do,

With regard to the fine-tuned models you have added support for, how exactly do we get that to work? I can’t see any documentation. Thanks :-)

Hi Lewis,

You’ll need to train the fine-tuned model. Once you have a trained model in your account, it will show up as an available model in the feed settings.

I created a form and enabled merge tags, and, like “Roxi” who posted on Feb 7th, I am getting a blank page. The form uses the text-davinci-003 model; I entered the prompt with variables from the form and added the {openai_feed_1} merge tag to the confirmation page.

Nothing. Is there not a tutorial on this out there yet? For us non-computer-science majors, this is basically like trying to translate Greek. Hope you can help.

You can cancel this. I got it to work. Still a lot to figure out though. Thanks.

Thanks for the heads up Steve!

Hi,

I’ve created a form using the text-davinci-003 model, entered the prompt with variables from the form, and added the {openai_feed_1} merge tag to the confirmation page.

However, the output is not being displayed.

Can you help resolve this issue?

Thank you.

Hi Roxi,

I tested this configuration and it is working for me in a local environment. If you’re a Gravity Perks customer, we’ll be happy to help you with this. Drop us a line.

Hello,

I have what I hope is a quick and easy question. How are the Moderation feed and the Completions feeds supposed to work together? It seems like a Moderation feed should process first and then – if it doesn’t fail – trigger the Completions feed with the same content. I don’t really see how to do that. Is that how they are intended to work together?

Thanks!

Hi J,

They don’t currently work together. They are separate endpoints in OpenAI, so they can’t be processed together. Your best bet for this kind of workflow is to use two separate forms: one for moderation and one for completion. You could then use Easy Passthrough to pass the values from the first form to the second.
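For anyone building a custom integration outside the plugin, the moderate-then-complete gating J describes looks like this in a minimal Python sketch. The response shape follows OpenAI’s moderation endpoint (a `results` list whose items carry a boolean `flagged`); `moderate` and `complete` are hypothetical callables standing in for the two API requests.

```python
from typing import Callable, Optional

def is_flagged(moderation_response: dict) -> bool:
    """True if any result in a moderation response is flagged."""
    return any(r.get("flagged", False) for r in moderation_response.get("results", []))

def moderate_then_complete(
    text: str,
    moderate: Callable[[str], dict],
    complete: Callable[[str], str],
) -> Optional[str]:
    """Run the completion only if moderation does not flag the text."""
    if is_flagged(moderate(text)):
        return None  # blocked: flagged content never reaches the completion step
    return complete(text)
```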

Is there any video showing how to set up the merge tag for an OpenAI feed and test it?

Hi Krishna,

We currently don’t have an official video for this, but we have an upcoming Workshop on 2nd March 2023 where we will address all these. In the meantime, I’ve sent you a link to a video with a similar use case on how to set it up via email. I hope it helps.

Best,

Is there a way to get 2 or more Open AI feeds to work on a single form submission? I have two response fields with each assigned to a feed. However, the second feed always generates a random response instead of capturing the information from the first feed.

Hi Umar,

I replied directly to you, but I’m posting my response here in case anyone else runs into the same issue.

The latest version of the plugin fixes the random response issue. The second feed does not populate “live” but will populate when the form submission is completed.

Hi there. This is so awesome. Thank you. I see the plugin now supports using custom/fine-tuned models. I know this is a big ask, but is there any chance please of providing a tutorial on how to do this? Thanks again. Richard.

That would be awesome. We’ve already followed up via email, Richard.

Best,

Hey Richard, not something we can do on the fly but we’ve been getting lots of similar requests around this plugin so we’re planning to dedicate our next workshop to all things GF + OpenAI.

Just be clear our actual next workshop is already scheduled so by next I mean the workshop after that one. 😄

I’m getting this error.

Fatal error: Uncaught Error: Call to undefined method WP_Error::is_old_school() in /home/customer/www/shayanahmedlatif.com/public_html/wp-content/plugins/gravityperks/gravityperks.php:494
Stack trace:
#0 /home/customer/www/shayanahmedlatif.com/public_html/wp-content/plugins/gravityperks/gravityperks.php(573): GravityPerks::get_message('register_gravit…', 'gravityforms-op…')
#1 /home/customer/www/shayanahmedlatif.com/public_html/wp-includes/class-wp-hook.php(310): GravityPerks::after_perk_plugin_row('gravityforms-op…', Array)
#2 /home/customer/www/shayanahmedlatif.com/public_html/wp-includes/class-wp-hook.php(332): WP_Hook->apply_filters(NULL, Array)
#3 /home/customer/www/shayanahmedlatif.com/public_html/wp-includes/plugin.php(517): WP_Hook->do_action(Array)
#4 /home/customer/www/shayanahmedlatif.com/public_html/wp-admin/includes/class-wp-plugins-list-table.php(1336): do_action('after_plugin_ro…', 'gravityforms-op…', Array, 'all')
#5 /home/customer/www/shayanahmedlatif.com/public_html/wp-admin/includes/class-wp-p in /home/customer/www/shayanahmedlatif.com/public_html/wp-content/plugins/gravityperks/gravityperks.php on line 494

I apologize for the trouble!

What version of Gravity Perks are you using?

I’m using version 2.6.9 of Gravity Forms.

Thanks! It looks like you have an active license. I’ll follow-up with you via email.

Is there any way to display the API response in the body of any page or post after submission, i.e. without merging it into a form field?

I would prefer not to do this by passing the value in the querystring.

Hi Danny,

You can use the feed’s merge tag and display it in a confirmation. If you wish to display it on a page after submission, our Gravity Forms Post Content Merge Tags perk is a great fit.

I suggest adding field mappings for PROMPT_TOKENS, COMPLETION_TOKENS, and TOTAL_TOKENS so we can extract this data from the returned response. For example, I could create a field (maybe tokenused:3) that captures the number of tokens used, and perhaps charge users per token used.

Hi there,

Thanks for the feature request! This is a good one.

We’ve added a new “raw” modifier in version 1.0-alpha-1.6 that you can use with the merge tag provided by the OpenAI feeds.

Example usage:

{:1:openai_feed_2,raw[usage/total_tokens]}

To get the value into a field, you can switch the merge tag to a Live Merge Tag and set it as the default value in a field. Live Merge Tags are provided by Gravity Forms Populate Anything.
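The `raw[...]` argument appears to address a value inside the JSON that OpenAI returns using a slash-separated path. The Python sketch below shows how `usage/total_tokens` maps onto a Chat Completions response. The response values are illustrative, `resolve_path` is hypothetical, and whether the modifier also supports numeric list indexes (as `choices/0/...` does here) is an assumption.

```python
from typing import Any

def resolve_path(data: Any, path: str) -> Any:
    """Walk a slash-separated path such as 'usage/total_tokens'."""
    current = data
    for key in path.split("/"):
        if isinstance(current, list):
            current = current[int(key)]  # numeric segments index into lists
        else:
            current = current[key]       # other segments are dictionary keys
    return current

# Illustrative, abbreviated Chat Completions response.
response = {
    "choices": [{"message": {"role": "assistant", "content": "Hello!"}}],
    "usage": {"prompt_tokens": 12, "completion_tokens": 5, "total_tokens": 17},
}
print(resolve_path(response, "usage/total_tokens"))  # 17
```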

Is it possible to show a progress bar, spinner, or “please wait” text while waiting for Gravity Wiz to get an answer to my prompt from OpenAI? Thank you

Do you mean on form submission or are you using Populate Anything’s Live Merge Tags?

I am using Live Merge Tags. Without visual feedback, the user might get confused. Thanks

Hi Sara,

You can use the gppa_loading_target_meta JavaScript filter hook to display a spinner while a Live Merge Tag is populating a value. I hope this helps.

Best,

Thank you for pointing me in the right direction. Unfortunately, the example at https://gravitywiz.com/documentation/gppa_loading_target_meta/#replace-live-merge-tags-individually-and-show-spinner does not explain exactly how I can show the spinner at a specific location (the middle of the page). Putting the example code in the Gravity Perks JavaScript plugin does nothing to my form. A “real” JS example would be much appreciated.

Hi Sara,

The snippet adds the spinner in place of the Live Merge Tag value while it is loading. It only works inside HTML fields.

We do not currently have a solution for displaying the spinner in the middle of the page. If you are a Gravity Perks license holder, reach out to our support team and we’ll be happy to look into what it would take to add support for this.

How do I integrate DALL·E? I don’t see a way to generate images.

Hi Joe,

This isn’t currently supported, but we are looking into adding it in a future update.

Something strange happened to my site when I added this. I was sending Gravity Forms URL queries to another form, and all of a sudden it wasn’t sending checkboxes in the queries.

When I submitted my form (after manually adding the correct checkboxes), GravityView was not picking up on the checkboxes being ticked.

The only change I made to the site was installing openai. I deactivated it and it started working again.

Very strange.

Hello @David Smith,

I just bought Gravity Forms to use with OpenAI. It works great!

I have the same formatting problem as Lewis Rowlands, but I can’t solve it with your answer. I get the answer as a string with no line breaks. This is how I call the answer: https://prnt.sc/xn5GpygWcutT

How to have the same formatting as in OpenAI (with line breaks)?

Thanks

Hi Vincent,

If David’s solution didn’t work for you, this will require some digging on your form setup. If you have an active Gravity Perks Advanced or Pro license, you can contact us via our support form.

Best,

Hello,

I’ve been looking for a form plugin to interact with Openai for quite some time and I think I’ve found it.

I want to ask a user to fill in some fields in a form that will be variables that I will use in an Openai prompt.

For example: field 1 of my form = keyword; field 2 of my form = tone of voice (fun OR serious); etc. Prompt to send to OpenAI = give me the definition of with this

After that, I want to display the result of the prompt (OpenAI’s answer) on the same page, just after the form.

Do you think that is possible with gravity form and this plugin ?

Thanks for your help,

Hi Vincent,

Based on what you describe, this should be possible with our plugin!

Best,

It seems that the response back is not formatted. For example, when asking to create a recipe there are no line breaks so it comes back as one full paragraph.

Is there any way to receive formatted text as it would appear in the OpenAI Playground?

Hey Lewis, if you map the value to a Paragraph field with the Rich Text Editor enabled, you’ll get full formatting preserved.

Hello Lewis, did you try David’s solution ? Bye, VL

How can you set this up to populate the output into another text field “after” you click submit? When I use Live Merge Tags, it sends the request and gives me the output back before I click submit. It would be good if the form didn’t load a thank-you message or go to another confirmation page, but just stayed open so you can keep making requests.

Hey Sean, both “Edits” and “Completions” have the “Map Results to Field” setting. In this scenario, you wouldn’t use Live Merge Tags.

This sounds like the functionality Live Merge Tags offers you? Is the difference that you actually want to capture each input as an entry without actually submitting the form?

** Is the difference that you actually want to capture each input as an entry without actually submitting the form? **

Yes! Is that actually possible with Gravity Forms?

Not currently. Closest solution would be something like our Reload Form perk but the form would still be submitted, it just makes reloading the form very quick.

This could be even more useful if it allowed users to specify a fine-tuned or embedded model.

I agree! Our dev team is looking into what would be required to add support for this.

Agreed! We have plans to support fine-tuned models.

Curious, how do you see this working with embeddings? We’re still brainstorming on how to incorporate them.

Hi Danny,

Using fine-tuned models is now possible with version 1.0-alpha-1.4 of the plugin. Re-downloading the plugin should give you the latest version.

If you need a custom separator for your fine-tuned model, I recommend using the following snippet: https://gist.github.com/claygriffiths/1ae860d89d8349914a6b176912a2883e

Can I use this with a fine-tuned model?

Not yet! We plan on adding the ability to select fine-tuned models for completions soon.

Hi Amin,

Using fine-tuned models is now possible with version 1.0-alpha-1.4 of the plugin. Re-downloading the plugin should give you the latest version.

If you need a custom separator for your fine-tuned model, I recommend using the following snippet: https://gist.github.com/claygriffiths/1ae860d89d8349914a6b176912a2883e

I can’t for the life of me get this to work. I created a form with a single long paragraph for the user to paste a book blurb into, created a feed, used edits, added a prompt, chose to merge it to a results field, and then added that to a confirmations page. But all it does is give me the “thank you, we’ll be in touch shortly” which isn’t an option anywhere.

Does this require buying the live merge plugin too?

Hi Chelle,

It’s hard to say what might be happening without looking at your form configuration. If I had to guess, I would double-check the merge tag you’re using in the results field. If you’re a Gravity Perks customer, we’ll be happy to dig into this further. Drop us a line.

This plugin doesn’t require Populate Anything to function, but it does if you want to use Live Merge Tags to output the OpenAI response into a form field. Otherwise, you can use regular merge tags in confirmations and notifications.

So, I’m new to ChatGPT but have just seen how powerful it could be. Can it, for example, with this plugin, take a text or paraphrase field value from a live form and return a sentiment analysis of it to another (hidden) field?

I mean paragraph field!

Hi Adnaan,

This works with Populate Anything, so you can use Live Merge Tags to populate a Hidden field with the response. https://gravitywiz.com/gravity-forms-openai/comment-page-1/#populate-anything

Best,